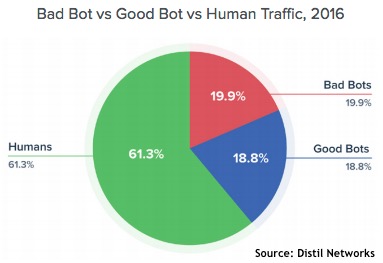

In 2016, 40% of all web traffic originated from bots — and half of that came from bad bots. A bot is simply a software application that runs automated tasks over the internet. Good bots are beneficial. They index web pages for the search engines, can be used to monitor web site health and can perform vulnerability scanning. Bad bots do bad things: they are used for content scraping, comment spamming, click fraud, DDoS attacks and more. And they are everywhere.

Findings from Distil’s 2017 Bad Bot Report (PDF), released Thursday, show that the problem is rising again after a brief improvement in 2015. In 2015, bad bots represented 18.61% of all web traffic, down from 22.78% in 2014; in 2016 the figure rose to 19.90%. These figures come from an analysis of hundreds of billions of bad bot requests, anonymized over thousands of domains.

Bad bots especially target web sites with proprietary content and/or pricing information, a login section, web forms, and payment processing. Ninety-seven percent of websites with proprietary content and/or pricing are hit by unwanted scraping; 90% of websites were hit in 2016 by bad bots that were behind the login page; and 31% of websites with forms are hit by spam bots.

Sophisticated bots, which Distil describes as “advanced persistent bots” or APBs, can load JavaScript, hold onto cookies, and load external resources. They are persistent, and can even randomize their IP address, headers, and user agents. In 2016, 75% of bad bots were advanced persistent bots, Distil says.

Bots attack the application layer, so the traditional defense has always been the web application firewall (WAF). It’s a good start, says Distil, but not enough. WAFs are good at blocking bad IPs, and can geo-block whole regions. While this could block, for example, China and Russia (if the site in question doesn’t do business with China and Russia), more than 55% of all bot traffic originates from within the US.

This doesn’t mean that the bot operators are mostly American citizens. “Unlike the criminals of yesteryear who needed to be physically present to commit crimes, cyber thieves have technology to do their bidding for them. Sure, a spammer bot might originate from the Microsoft Azure Cloud, but the perpetrator responsible for it could be located anywhere in the world.”

While blocking entire countries (Russia and China, for example) may be a feasible defense, bot origination from friendly nations such as The Netherlands (11.4%) is almost twice that of China (6.1%). Blocking individual known bad IPs is also problematic. “Bad bots rotate through IPs, and cycle through user agents to evade these WAF filters,” warns Distil.
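To see why a static list of bad addresses struggles against rotation, consider a minimal sketch of the kind of check an IP filter performs. The networks below are reserved documentation ranges standing in for "known bad" addresses, and `BLOCKED_NETWORKS` and `is_blocked` are hypothetical names, not anything taken from Distil's report or from a particular WAF product.

```python
import ipaddress

# Hypothetical blocklist: documentation-range networks stand in for "known bad" IPs.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.7/32"),
]


def is_blocked(client_ip: str) -> bool:
    """Return True if client_ip falls inside any blocked network."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in BLOCKED_NETWORKS)


if __name__ == "__main__":
    # An address already on the list is stopped...
    print(is_blocked("203.0.113.25"))
    # ...but the same bot arriving from a fresh address is not.
    print(is_blocked("192.0.2.44"))
```

The filter only knows about addresses it has already seen misbehave, so a bot that moves to a new address on each request is treated as a first-time visitor every time.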

“You’ll need a way to differentiate humans from bad bots using headless browsers, browser automation tools, and man-in-the-browser malware,” it adds. “52.05% of bad bots load and execute JavaScript, meaning they have a JavaScript engine installed.”

The failure of WAFs to adequately block bad bots has contributed to the increase in application layer DDoS attacks. A volumetric DDoS attack (itself a rapidly growing problem due to botnets harnessing the power of the internet of things) simply floods the website until further access is impossible. However, this is a Layer 3 attack that can be easily spotted and, with sufficient planning, mitigated.

“In contrast,” notes Distil, “an application denial of service event occurs when bots programmatically abuse the business logic of your website. This happens at layer seven, so you won’t notice it on your firewall and your load balancer will be just fine. It’s the web application and backend that keels over.”

A simple example of this type of attack is found in WordPress websites. A bot adds ‘web-admin.php’ (it could be anything that waits for further user input) to a legitimate page URL, and then sends repeated access requests while cycling through multiple IPs. This rapidly leads to the consumption of all available channels — and the site is effectively down.
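Because the requests in such an attack arrive from many different IPs, counting per client address does not help much. One possible countermeasure, sketched here as an illustration and not as anything Distil prescribes, is to count requests per URL path within a sliding time window. `PathRateLimiter` and its parameters are hypothetical names, and the thresholds are arbitrary.

```python
from collections import defaultdict, deque


class PathRateLimiter:
    """Sliding-window limiter keyed by URL path rather than by client IP."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # path -> timestamps of accepted requests

    def allow(self, path: str, now: float) -> bool:
        """Return True if a request for path at time now fits within the limit."""
        hits = self._hits[path]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = PathRateLimiter(max_requests=3, window_seconds=10.0)
    # Four requests to the same path in quick succession, whatever their source IP.
    for t in (0.0, 1.0, 2.0, 3.0):
        print(t, limiter.allow("/some-page/web-admin.php", now=t))
```

The trade-off is that a per-path limit also throttles legitimate visitors once it trips, so in practice it would suit expensive or rarely used endpoints better than popular pages.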

The threat from bad bots should not be underestimated; their nuisance value alone can cause problems. Scraped content that is reposted elsewhere will lower search engine scores and affect good traffic. Bots can also skew traffic analytics, feeding false information into web analytics and potentially leading to false assumptions and misguided future planning.

But many bots have malicious intent from the beginning — for example, bad bots are one of the primary methods of testing stolen credentials. “In 2016,” says the report, “95.8% of websites fell prey to account credential bots on their login page. In other words, if you sample any group of 100 websites that contain a login page, 96 of them will have been attacked in this manner… With billions of stolen login credentials available on the dark web, bad bots are busy testing them against websites all over the globe.”

“Massive credential dumps like Ashley Madison and LinkedIn,” says Rami Essaid, CEO and co-founder of Distil Networks, “coupled with the increasing sophistication of bad bots, has created a world where bad bots are running rampant on websites with accounts. Website defenders should be worried because once bad bots are behind the login page, they have access to even more sensitive data for scraping and greater opportunity to successfully carry out transaction fraud.”

There is no easy solution to the threat from bad bots. Distil recommends several options, such as “geo-fence your website by blocking users from foreign nations where your company doesn’t do business”; whitelist current or recent browser versions and block older versions from accessing the site; and consider “creating a whitelist policy for good bots and setting up filters to block all other bots — doing so blocks up to 25% of bad bots.”
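The whitelist and browser-version recommendations can be sketched as a simple user-agent policy. Everything specific below is an assumption made for illustration: the good-bot tokens, the minimum browser versions, and the `classify` function are hypothetical, not values from the report.

```python
import re

# Hypothetical policy values, chosen only for illustration.
GOOD_BOT_TOKENS = ("Googlebot", "bingbot")
MIN_BROWSER_MAJOR = {"Chrome": 50, "Firefox": 45}

BOT_HINT = re.compile(r"bot|crawler|spider", re.IGNORECASE)
BROWSER_VERSION = re.compile(r"(Chrome|Firefox)/(\d+)")


def classify(user_agent: str) -> str:
    """Return "allow" or "block" for a request's User-Agent string."""
    # Whitelisted good bots are let through first.
    if any(token in user_agent for token in GOOD_BOT_TOKENS):
        return "allow"
    # Any other self-declared bot is blocked.
    if BOT_HINT.search(user_agent):
        return "block"
    # Browsers older than the configured minimum major version are blocked.
    match = BROWSER_VERSION.search(user_agent)
    if match and int(match.group(2)) < MIN_BROWSER_MAJOR[match.group(1)]:
        return "block"
    return "allow"


if __name__ == "__main__":
    print(classify("Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"))
    print(classify("ExampleScraperBot/1.0"))
    print(classify("Mozilla/5.0 (Windows NT 10.0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2272.89 Safari/537.36"))
```

User-agent strings are self-reported, so a policy like this only stops bots that identify themselves honestly or use stale browser strings. That is consistent with the report's estimate that such filtering blocks only a fraction of bad bots; a real deployment would also need to verify that a claimed good bot is genuine.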

Doing nothing is not a realistic option, because “you won’t see the next bad bot attack coming even though it’s all over your site.”